Previewing Evaluation 2013

October 16, 2013 @ 11:00 am - 12:00 pm

The American Evaluation Association’s Evaluation 2013 kicks off this week in Washington, DC, with professional development workshops starting today (October 14, 2013) and the main portion beginning on Wednesday afternoon (October 16, 2013).

Here are some of the RTP Evaluators you will find presenting.

- Kristin Bradley-Bull

- Tobi Lippin

- Karen Peterman

- Holli Gottschall Bayonas

- Sally Bond

- Amy Germuth

- Joy Sotolongo

- Myself (Chris Lysy)

For more on what they will be presenting, and a few cartoons, continue on. Hope to see you in DC!

Kristin Bradley-Bull and Tobi Lippin

Who Knows? Engaging Laypeople in Meaningful, Manageable Data Analysis and Interpretation

Professional Development Workshop to be held in Columbia Section 8 on Wednesday, Oct 16, 8am-11am

How can evaluators simultaneously support high-quality data analysis and interpretation and meaningful participation of “laypeople” such as program participants and staff?

This workshop offers a practical look at some of the key strategies developed over a decade of facilitating these processes. Learn how to provide targeted, hands-on data analysis and interpretation training and support; develop accessible intermediate data reports; and carefully craft meeting agendas that succeed in evoking high-quality participation and analysis. This workshop will provide many take-home tools and give you a bit of hands-on experience.

Who will get the most out of your presentation?

Anyone who values – and maybe even uses – participatory approaches to evaluation (or anything data-related) but who hasn’t yet figured out how to apply these approaches specifically to the stage of analyzing and interpreting data. We are all about “how to” and will make sure people leave with concrete approaches they can apply right away.

Can you give me a little teaser?

The recipe might be: a cup each of facilitation, analysis, and training skills; a pint of trust in a group of committed people to move a piece of collective work forward in a meaningful way; and “improvisation/responsiveness” to taste.

What’s special about your presentation?

More than half the workshop will be spent with rolled-up sleeves working on exactly what we are talking about. (Did I mention we are very practical here?!)

Where would you like to refer people interested in your presentations?

We love to share what we’ve learned over, maybe, 15 years thus far of developing (and, sometimes, fumbling with) various approaches to stakeholder-engaged analysis and interpretation. Thank goodness our varied groups of stakeholders have been both flexible and willing to give a lot of feedback on their experiences! We have learned – and continue to learn – so much from them. Our website has various resources for people interested in this work: www.newperspectivesinc.org

Facilitation: An Essential Ingredient in Evaluation Practice

Think Tank Session 83 to be held in Columbia Section 11 on Wednesday, Oct 16, 6:10 PM to 6:55 PM

There are many intersections between evaluation and facilitation. In evaluation, facilitation can play a role in helping groups map a theory of change, in data collection through focus groups or other dialogues, and in analysis by involving stakeholders in making meaning of the findings. While each of these steps is described in evaluation texts and the literature, less attention is given to describing facilitation approaches and techniques. Even less is written about evaluating facilitation practices, which are integral to organizational development and collaborative decision-making. Choices for facilitation methods to implement depend on the client, context, and priorities of the work, as well as the practitioner’s skill, confidence, and philosophy. This think tank brings together a group of evaluators and facilitators collaborating on a publication about these complementary practices. We hope to spark a deeper conversation and reflections among participants about the role of facilitation in evaluation and of evaluation in facilitation.

Who will get the most out of your presentation?

First, let us say that we were invited to join this think tank, so our perspectives may be somewhat different from those of the fabulous people who are convening all of us: Dawn Hanson Smart, Rita Fierro, and Alissa Schwartz. That said, this think tank will be particularly interesting to evaluators already intentionally using facilitation in their work.

Can you give me a little teaser?

How about a couple? We’re all thinking deeply about how facilitation is applied in an evaluation context and the implications. We are also thinking about how the field of evaluation has growth opportunities as viewed through a facilitation lens.

What’s special about your presentation?

We love AEA conferences for many reasons – among them the number of sessions and other spaces that promote dialogue among the many, many interesting and engaged people who attend. This think tank will be in World Café style. The session is taking place because all of us – facilitators and evaluators – under the able editorial leadership of Dawn, Rita, and Alissa, are working on developing an upcoming New Directions for Evaluation issue on the intersection(s) of evaluation and facilitation. We welcome AEA folk to come help shape this conversation.

Karen Peterman

Getting Ahead of the Curve: Evaluation Methods that Anticipate the Next Generation Science Standards (NGSS)

Panel Session 63 to be held in Piscataway on Wednesday, Oct 16, 6:10 PM to 6:55 PM

The state of STEM evaluation practice in the early 21st Century is in transition as we await the final release of the Next Generation Science Standards (NGSS), which will guide research and evaluation in science education in the coming years. This panel features STEM evaluation methods that have been developed and field-tested in anticipation of the NGSS. The panelists are both evaluating supplemental education programs that promote instructional practices consistent with the NGSS in middle and high school classrooms, and have taken advantage of the opportunity to explore evaluation methods that will reveal teaching and learning consistent with NGSS concepts and practices. Each panelist will share specific methods and field test results, including performance-based assessments and a classroom observation protocol supported with virtual student artifacts generated as part of a technology-supported science curriculum. With feedback from the audience, the panelists will reflect on the merits and challenges of the work.

Who will get the most out of your presentation?

STEM evaluators who are interested in NGSS and methods that anticipate this policy shift

What’s special about your presentation?

This panel is sponsored by the new STEM TIG. This is our first AEA with STEM-sponsored sessions through the TIG, and so the panel is special for that reason. Beyond that, Kim and I are excited about sharing our work and then talking with others about whether this is the direction that STEM evaluators’ work should be heading or whether there are other compelling new directions for those who specialize in STEM evaluation.

Reframing: The Fifth Value of Evaluators’ Communities of Practice

Panel Session 20 to be held in Columbia Section 1 on Wednesday, Oct 16, 4:30 PM to 6:00 PM

If You Aren’t Part of the Solution… Reframing the Role of the Evaluator

Evaluators can play a key role in changing the complex adaptive systems in which our work is embedded, but taking this responsibility necessitates reframing ideas about what it means to be an “external evaluator,” as well as the scope of our work. The presenter, a seasoned STEM evaluator, will share the ways her perspectives on the evaluator’s role have evolved as part of the ECLIPS community of practice, as well as the impact that this shift has had on her evaluation practice. Examples include (a) using participatory evaluation methods, (b) consulting with clients to create a fuzzy logic model, (c) positioning conclusions and recommendations in relation to the complex system itself, and (d) challenging clients to think about system-level change and how their projects really can make a difference. The presenter will also explore whether/how evaluators consider themselves to be active and contributing members of the complex systems they evaluate.

Who will get the most out of your presentation?

Anyone who is interested in systems theory and the impacts it can have on evaluation as a practice and/or evaluators as professionals

Can you give me a little teaser?

Evaluators should start envisioning ourselves as levers, the small changes that can result in large changes in a system. What the heck does that mean? Come to the session and find out!

What’s special about your presentation?

I think all of the panelists have studied p2i pretty extensively. We have worked hard to make our presentations beautiful and thought-provoking.

Holli Gottschall Bayonas

Evaluating a Spanish Basic Language Program at a Mid-sized Southeastern University

Poster Presentation 101 to be held Wednesday, Oct 16, 7:00 PM to 8:30 PM

The author and the Director of Language Instruction at a mid-sized public university are designing an evaluation of the Basic Language Program in Spanish. The Director has engaged in informal evaluation of the program since assuming the position in Fall 2009, but within the contextual factors of the university, such as adhering to regional accreditation requirements, focusing on student learning outcomes, and following the traditional program review process led by the university’s Institutional Research office and the Office of the Chancellor. The presentation will document the steps involved in designing the evaluation, the design itself, and some preliminary results. Of particular interest will be the integration of the Student Learning Outcomes within the logic model.

Ignite Your Education: Evaluation of Teaching and Learning and Schools

Ignite Session 253 to be held in Lincoln West on Thursday, Oct 17, 1:00 PM to 2:30 PM

The Open-Response Rating Question: A Jerry Rigged Question-type in Qualtrics™ to get at What and How Much of School Initiatives

This ignite presentation will share how the author used a variation of the matrix table question-type in Qualtrics™ to ask survey respondents about other programs that may be affecting the climate in their schools. The presentation will include the context for the question, how to create the question on a survey, visuals of how the data appears on reports, and how the author has used the question type to show the plethora of education initiatives that exist in K-12 schools.

Sally Bond

Using Learning and Mentoring to Build Evaluation Capacity in 21st Century

Multipaper Session 622 to be held in Suite 2101 on Friday, Oct 18, 2:40 PM to 4:10 PM

Adult Learning Theory and Theories of Change in Evaluation Capacity Building Initiatives

Increasing demand for program accountability and results, combined with limited resources for external evaluators, puts pressure on internal program staff to assume more responsibility for program evaluation. Evaluation capacity building (ECB) initiatives target these program staff to increase their knowledge and skill at evaluating the implementation and outcomes of the programs they operate. ECB initiatives often reference the use of adult learning *principles* in specific activities; however, the theories of change underlying these initiatives rarely make use of more complex adult learning *theories.* ECB practitioners can benefit from a deeper understanding of adult learning in order to design interventions that promote deeper learning of evaluation principles and methods. This paper examines (a) the extent to which adult learning theories currently imbue theories of change in ECB projects and (b) the ways in which selected adult learning theories might inform ECB practice.

The State of Evaluation Practice in the Early 21st Century: How Has the Theme of Evaluation 2013 Influenced Our Beliefs?

Plenary Session 994 to be held in International East on Saturday, Oct 19, 4:30 PM to 5:30 PM

Over the past few days we have been conversing with our colleagues on topics that speak to our expertise, our interests, and our curiosities. Throughout, one of the filters we have applied has been our conference theme, “The State of Evaluation Practice in the Early 21st Century”. How has this filter influenced what we believe about ourselves as evaluators, about our field, and about what we can do for the groups we serve? The closing plenary will challenge us to address this question by giving us answers from a disparate group of evaluators. The panel represents variety with respect to tenure in the field, domain expertise, employment sector, and personal background. As a spur to a collective discussion, panel members will spend a few minutes sharing their thoughts about the conference theme.

Amy Germuth

Data Visualization in the 21st Century

Multipaper Session 296 to be held in International Center on Thursday, Oct 17, 2:40 PM to 4:10 PM

The History and Future of Data Visualization and its Impact on and Implications for Evaluation

This paper sets the stage for the other papers by exploring the history of data visualization, including its roots in cartography, statistics, data, visual thinking, and technology, and its impact on social sciences and society. Next, attention is given to current trends in data visualization followed by predictions for its future, with a focus on implications for evaluators and evaluation. Discussion centers on how data visualization will result in a) greater expectations among the public for transparency and data-informed decision-making, b) greater involvement of stakeholders in data mining and analysis, c) greater needs for evaluators to create systems that incorporate measurement and real-time reporting to drive the data-informed culture, and d) greater recognition by evaluators of the value of building the capacity of stakeholders to identify data needs, understand available data, and know their limitations in both analysis and interpretation, driving more serious thought regarding effective data visualization and reporting.

Independent Consultants at the Crossroad – What Independent Consultants Report as Trends and Challenges in Evaluation and Evaluation Consulting

Business Meeting Session 392 to be held in Columbia Section 7 on Thursday, Oct 17, 6:10 PM to 7:00 PM

This presentation will: (1) Provide an overview of independent consulting within the broader sphere of evaluators; (2) Present the results of a web survey, conducted in December 2012, of independent consultants as identified via membership in the AEA ICTIG, in which responses from 140 members were qualitatively analyzed to identify what they perceive as future trends in evaluation and evaluation consulting over the next five years and the challenges they currently face; (3) Report on ways in which the ICTIG might move to better support independent evaluation consultants, including what training ICTIG members reported would be most useful; and (4) Conclude with a summary and discussion of the implications of the survey responses.

Joy Sotolongo

An Apple a Day Keeps the Evaluator Away? Engaging Health Care Providers in Evaluation of Community-Wide Teen Pregnancy Prevention Initiatives

Panel Session 423 to be held in Kalorama on Friday, Oct 18, 8:00 AM to 9:30 AM

A Gathering of Unusual Suspects: One County’s Evaluation of Increased Access to Teen Health Services

This presentation will describe contributions from an array of multi-disciplinary partners for an evaluation of the President’s Teen Pregnancy Prevention Initiative demonstration project in Gaston County, North Carolina. In addition to the usual suspects (health care staff), unusual suspects (advertising professionals, county planners, youth development programs) and teens themselves conduct evaluation activities. The presentation will describe the role of each contributor, the type of data they bring to the table, and how their multiple perspectives provide a more complete and interesting picture of the project’s experiences with increasing teen access to health services. Examples of data from the multi-disciplinary perspectives will include social marketing web analytics; detailed census tract maps; pre/post survey results; and depiction of teen experiences in their own voices.

Chris Lysy

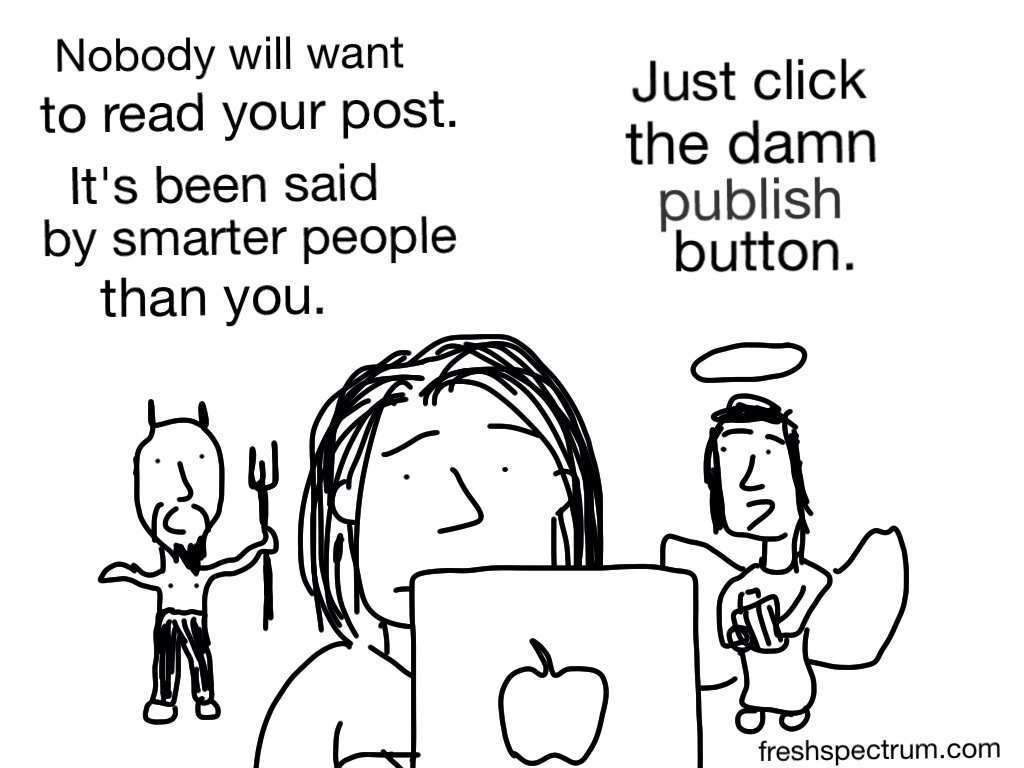

Evaluation Blogging: Improve Your Practice, Share Your Expertise, and Strengthen Your Network

Think Tank Session 770 to be held in International Center on Saturday, Oct 19, 9:50 AM to 10:35 AM

Want to start blogging about evaluation, but not sure where to start? Started, but want to know what to expect (or what to do next, or how to keep it going)? Ready to take your independent consulting practice to the next level? Or just want to have fun with a new way of communicating with fellow evaluators? In this Think Tank session, you will hear from bloggers with varying degrees of blogging experience who blog through a variety of channels and formats — personal blogs, blogging on behalf of an employer, writing for AEA365, blogging through cartoons and videos, or blogging by guest-posting or co-authoring blog posts. Facilitators will share strategies for success and address potential concerns relevant to both novice and veteran bloggers in an interactive format with break-out groups and opportunities for participants to ask specific questions. We’ll end with a discussion of collaboration across the blogging community.

Who will get the most out of your presentation?

Evaluators who are blogging, or thinking about blogging.

What’s special about your presentation?

The range of experience. Sheila Robinson (http://sheilabrobinson.com/) has been blogging for about a year. Ann Emery (http://emeryevaluation.com) for a couple of years. Myself (freshspectrum.com and evalcentral.com) and Susan Kistler (http://aea365.org/) for more than a few years. The session will be discussion focused and only loosely structured, so come with questions!